In June 2021, I was invited to give a presentation at Nordic Testing Days about our artificial intelligence-based test automation solutions. I enjoy sharing my experience with others, and I have mentored and trained more than 40 new employees and summer interns. AI seems to be quite a popular topic, and one of the most common questions during the Q&A session was how I came up with the idea of implementing the AI solution. Well, time flies, and the story I need to tell started 15 years ago.

My Playtech journey started in 2007, when I was still a student at TalTech University. One sunny summer day, I was invited to an interview at the Videobet Tallinn office. I must admit, the interview was carried out comprehensively and professionally by my future managers Nurit and Adrian. After the interview, Nurit took me on an office tour, where I was told that our main products are terminal cabinets, on which we could play games every day. I was thinking, what a dream job! Ever since my childhood, I had always had to pay to play games! Even more, she showed me the fridge full of soft drinks and the Oreo cookie jars on the kitchen table – these were free for all employees! I made the decision right there – this is a company I must join.

Videobet was still a startup company in many ways back then. For example, one of our main challenges was game stability. Our games were randomly crashing during play, and the terminals often showed blue screens because of network interruptions. To improve stability, we had no other choice but to play games whenever possible. After playing a few thousand game rounds per day, with the melodious, exotic background music ringing in my ears well after we had stopped, we understood that we must approach this differently. We started to search for tools that automate game play and, after some research, settled on a coordinate-based automation tool named “Mouse Recorder” – a third-party tool which records mouse clicks and replays them.

As you are reading this, I know some of you may be laughing and wondering what kind of automation I am talking about. There are two factors to keep in mind that will make it easier to understand. First, our gaming cabinets use an embedded Windows OS, and some of them are even standalone terminals, which means no internet connection. Secondly, back in 2007, Jason Huggins was still developing Selenium RC. (Time flies, right? 😊) With all this in mind, you can understand that our options were limited. So, we would use “Mouse Recorder” to do functional terminal tests during the day and run non-functional tests at night.

Soon, we found that “Mouse Recorder” couldn’t help us in some important scenarios. First, it could not press the physical hardware buttons on a terminal. Moreover, it was very hard to cover complex game scenarios with this tool, for example gamble rounds, free spins, and all kinds of bonus games in slot games. Additionally, it could not run multiple games and game stages, and last of all, there were no debugging logs. Still, we had tasted the benefits of “Mouse Recorder”, and we didn’t hesitate to start researching how to improve on it.

After a few weeks of research and development, our legendary Java utility called “NightRun” was born. “NightRun” is an in-house tool which reads XML scripts, generates debugging logs, and controls terminal hardware. This simple tool played a key role in helping us improve game stability. Beyond that, it also taught us a great lesson – continuous improvement (Kaizen).

Without “NightRun”, we wouldn’t have been able to stabilize the games, so naturally we started to think about how to expand the functional test coverage to the cashier system, the back-office management system, and so on. From Watir and AutoIt to Selenium RC/WebDriver, SWTBot and Appium; from QuickBuild to GitLab CI; from Excel to TestRail; we gradually built a fully automated, cross-platform continuous integration testing system which can cover most of our system’s functional scenarios.

And wouldn’t you know, soon we were facing another big challenge. We had developed an important feature for the customer called “service window” – basically a system which allows players to log in and manage their account information on the terminal. The first and most important scenario is to check whether the login page opens on the terminal after pressing the “service window” button. Yes, it is as simple as it sounds: just press the button, and verify that the keyboard screen and login screen both appear.

However, the challenging part is that we have integrated hundreds of third-party games into our platform, and different customers request different game themes. Moreover, we have different types of cabinets, which means different resolutions, and to top it all off, we release multiple snapshot builds daily! So, the actual number of possible test cases = number of games × number of cabinets × number of themes × number of builds.
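To give a feeling for how quickly this product grows, here is a small Python sketch. Only the figure of 140 games appears in this article (it is the number of games we later collected training data for); the cabinet, theme, and build counts are made-up placeholders, not our real numbers.

```python
from math import prod

# Only the game count comes from the article; every other value is a
# hypothetical placeholder used to illustrate the multiplication.
dimensions = {
    "games": 140,
    "cabinets": 5,         # hypothetical
    "themes": 4,           # hypothetical
    "builds_per_day": 3,   # hypothetical
}


def count_test_cases(dims):
    """Return the size of the full test matrix: the product of all dimensions."""
    return prod(dims.values())


total = count_test_cases(dimensions)
print(f"Possible test cases per day: {total}")
```

Even with these modest placeholder values, the matrix runs into thousands of combinations per day, and adding one more cabinet type or theme multiplies the total rather than adding to it.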

It would take a huge amount of time to cover all possible test cases manually. Even if we implemented an automation solution, we would still need to write logic to verify the expected results for each test case, which is also quite a time-consuming task. On top of that, legacy code, flaky tests, and upcoming new games might cost us even more time to maintain the automation scripts. Then I had an idea: if I had good experience training new employees and interns, how about training a computer to define the logic for these tasks? We researched how to train artificial intelligence models, and among all the available artificial intelligence libraries we luckily came across some well-developed image recognition libraries.

Our artificial intelligence-based test automation solution is based on a Python library named “ImageAI”, which is composed of two main parts: training and prediction. We developed a Java utility to collect data for 140 games automatically (game pictures in different stages, labeling, sorting) for the training stage, and then we started the model training, where the main parameters are as follows:

- num_experiments - the number of times the trainer will study all the images in the given dataset to achieve maximum accuracy.

- batch_size - refers to the number of images the trainer will study at a time until it has studied all the images in the given dataset.

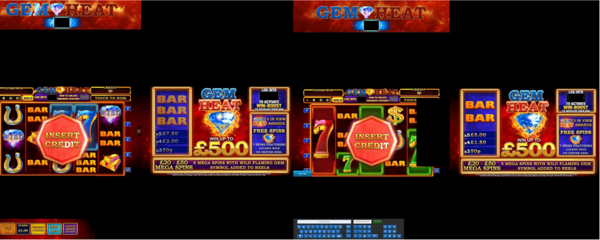

- number_objects - the number of different categories under the training/test folders. In this case there are 2 categories: “service window open” and “service window not open”, as seen in the images below.

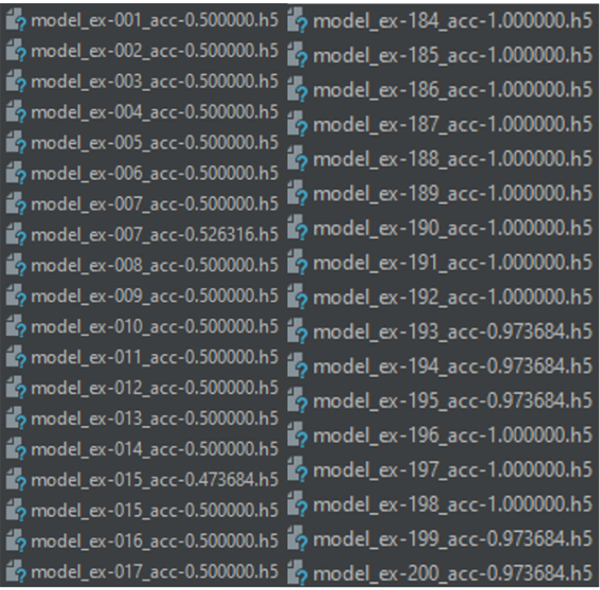
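The three parameters above can be put together roughly as follows. This is a minimal sketch, not our actual training script: it assumes the ImageAI 2.x custom classification API (`ClassificationModelTrainer`), where the category count is passed as `num_objects`, and it assumes a ResNet50 model type. The dataset folder name and the batch size of 32 are hypothetical; the values 2 and 200 come from this article. The ImageAI import sits inside the function, so the configuration part runs even where the library is not installed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    data_directory: str          # folder containing train/ and test/ subfolders
    num_objects: int = 2         # "service window open" / "service window not open"
    num_experiments: int = 200   # one model is saved per experiment
    batch_size: int = 32         # hypothetical value, not stated in the article


def train(config):
    """Start a training run (assumes the ImageAI 2.x API is installed)."""
    # Imported lazily so the rest of this sketch works without ImageAI.
    from imageai.Classification.Custom import ClassificationModelTrainer

    trainer = ClassificationModelTrainer()
    trainer.setModelTypeAsResNet50()  # assumption: model type is not stated in the article
    trainer.setDataDirectory(config.data_directory)
    trainer.trainModel(
        num_objects=config.num_objects,
        num_experiments=config.num_experiments,
        batch_size=config.batch_size,
    )


# Hypothetical dataset folder name.
config = TrainingConfig(data_directory="service_window_dataset")
print(config)
# train(config)  # uncomment on a machine with ImageAI and the dataset available
```

Other ImageAI versions name the classes and parameters differently, so check the documentation of the version you have installed before copying this.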

The training results in a collection of models (seen on the left), where each model’s name includes the experiment number and the model’s expected accuracy. A total of 200 models were trained, as defined by “num_experiments”. From the trained models, we selected the best model for prediction, which was usually the one with the highest accuracy rate. In the prediction stage, we also defined the folder that contained the test images, and the failure probability rate.

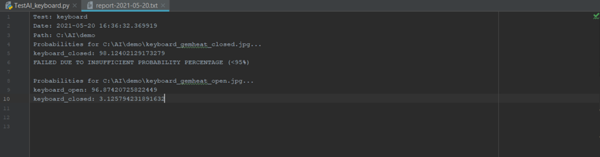
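Picking the most accurate model out of 200 files can be scripted. The sketch below assumes the file naming used by ImageAI 2.x, `model_ex-<experiment>_acc-<accuracy>.h5`; the file names in the example are invented, and other ImageAI versions may name their files differently. Note that it simply takes the highest accuracy, which is the usual choice described above but not the only possible one.

```python
import re

# Assumed ImageAI 2.x naming pattern, e.g. "model_ex-042_acc-0.987654.h5".
MODEL_NAME = re.compile(
    r"model_ex-(?P<experiment>\d+)_acc-(?P<accuracy>\d+\.\d+)\.h5$"
)


def parse_model_name(filename):
    """Return (experiment, accuracy) or None if the name does not match."""
    match = MODEL_NAME.search(filename)
    if match is None:
        return None
    return int(match["experiment"]), float(match["accuracy"])


def best_model(filenames):
    """Return the file with the highest accuracy; ties go to the later experiment."""
    candidates = []
    for name in filenames:
        parsed = parse_model_name(name)
        if parsed is not None:
            experiment, accuracy = parsed
            candidates.append((accuracy, experiment, name))
    if not candidates:
        raise ValueError("no model files matched the expected naming pattern")
    return max(candidates)[2]


# Invented file names for illustration only.
models = [
    "model_ex-001_acc-0.512000.h5",
    "model_ex-057_acc-0.981250.h5",
    "model_ex-140_acc-0.993750.h5",
    "model_class.json",  # ignored: not a model file
]
print(best_model(models))
```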

In the demo case below, you can see the test images are from a game named “Gem Heat” and that the failure probability rate is 95%. The image recognition AI successfully predicted “service window open” and “service window not open” scenarios and generated reports automatically.
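One way to read the 95% failure probability rate is as a confidence threshold: a test image passes only if the predicted category matches the expected one and the prediction probability is at least 95%. The sketch below illustrates that reading only. The image names and probabilities are invented, and the function is a hypothetical helper, not part of ImageAI or of our report generator.

```python
FAILURE_THRESHOLD = 95.0  # percent, the rate used in the demo case


def check_prediction(expected, predicted, probability, threshold=FAILURE_THRESHOLD):
    """Pass only when the label matches and the probability reaches the threshold."""
    return predicted == expected and probability >= threshold


# Invented results: (image, expected label, predicted label, probability in percent).
results = [
    ("gem_heat_01.png", "service window open", "service window open", 99.2),
    ("gem_heat_02.png", "service window not open", "service window not open", 97.8),
    ("gem_heat_03.png", "service window open", "service window open", 81.4),
]

report = {
    image: check_prediction(expected, predicted, probability)
    for image, expected, predicted, probability in results
}

for image, passed in report.items():
    print(f"{image}: {'PASS' if passed else 'FAIL'}")
```

Under this reading, the third image fails even though the label is right, because the model is not confident enough.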

We have used this solution for testing a few hundred games, and so far, the AI has done a fantastic job and predicted everything correctly. After implementing this solution, we have saved massive amounts of time by sidestepping the need to maintain traditional automation test scripts for different game themes and resolutions, and we also don’t have to write new scripts for newly integrated games. We are still trying to optimize the system – gathering more test images to expand the number of categories, updating all project dependencies to their latest versions, speeding up training with a dedicated GPU, and exploring other features in ImageAI, such as object detection in images and videos.

When I recall the start of my journey at Videobet, I have to say that besides the cozy office and the free drinks and cookies I loved so much at the beginning (we still have them daily, by the way), I have really enjoyed working on these projects with my super talented and professional colleagues. Every day I could hear great ideas or use cases and learn new technologies from them, which inspired me a lot to work on continuous improvement. And last, but not least – credit goes to our management as well, who firmly encourage and support us in adopting cutting-edge technologies and devices.